[Translation] No. 30: Statistical engineering links statistical thinking, methods, t

Last edited by 小编D on 2011-12-15 17:48

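Hello, I'm 小编H. If you are a group member interested in translating the article below, please leave your estimated completion time and send a private message to 小编H, so that the editor can register translator information and handle rewards and penalties once the article is finished.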

by Roger W. Hoerl and Ronald D. Snee

The philosopher Thomas Kuhn showed that a way of thinking (a paradigm) lasts until the problems no longer adequately addressed by the paradigm are so important that a new paradigm is needed to deal with that list of unsolved problems.1

Some urgent problems not being adequately addressed by existing statistically based approaches to quality improvement include:

Ensuring statistical projects have high impact. Too often, we experience the phenomenon that "the operation was a success, but the patient died." In other words, our application of statistics is technically impeccable, but it doesn’t actually drive tangible improvement.

Integrating concepts of statistical thinking with the application of statistical methods and tools. A gap or disconnect exists between the two, resulting in poor application of the important principles of statistical thinking and lost opportunities to apply statistical methods effectively. There’s a missing link that will drive proper application of statistical methods based on a solid understanding of statistical thinking principles.

Providing statisticians an opportunity to become true leaders in their organizations, rather than passive consultants.

Therefore, we propose a different paradigm for the quantitative approaches to quality improvement. We call it statistical engineering.

The term statistical engineering has been used before, perhaps most notably by consultant Dorian Shainin, who generally used it to indicate the application of statistical approaches that were ad hoc (but generally worked) rather than based on formal statistical theory.

By contrast, we use the term in its literal sense. Engineering can be defined as the study of how best to use known scientific and mathematical principles for the benefit of mankind. This contrasts with pure science, which attempts to advance our understanding of natural laws and phenomena.

For example, after years of research, a chemist might invent a new marketable substance in the laboratory. Chemical engineers might then determine how to scale up the process to produce this substance commercially, design the plant to manufacture it and identify how the plant should be operated to be profitable.

The statistical engineering discipline would be the study of how to use the principles and techniques of statistical science for the benefit of humankind. From an operational perspective, statistical engineering is defined as the study of how to best use statistical concepts, methods and tools, and integrate them with IT and other relevant sciences to generate improved results.

In other words, engineers—statistical or otherwise—do not focus on advancement of the fundamental laws of science, but rather how these laws might be best used for practical benefit. This is not to say engineers do not research or develop theory. Rather, it suggests engineers’ theoretical developments tend to be oriented toward the question of how to best use known science to benefit society.

We’re not suggesting that society no longer needs research in new statistical techniques for improvement; it does. The balance needed at this time, however, is perhaps 80% for statistics as an engineering discipline and 20% for statistics as a pure science.

For example, new strategies to better use control charts for maximum benefit in healthcare, finance and other service industries are needed even more than additional research on the mathematical properties of control charts themselves. Both are still needed, but we call for a shift in emphasis from the latter to the former.

Statistical engineering example

To provide a tangible example of statistical engineering, consider lean Six Sigma (LSS). Critics have pointed out that LSS hasn’t actually invented any new tools, and they are right. This is one of the reasons academia has been much slower to focus attention on LSS than on improvement methods that came with new tools, such as Taguchi Methods.

We believe LSS has not invented any new tools because it is not an example of statistical science, but rather statistical engineering.

Consistent with the critics’ observation, LSS has taken existing statistical principles and tools and integrated them with other disciplines, such as lean enterprise, quality engineering and operations research, to provide an overall method that generates more dramatic results than had been obtained previously. The novelty of LSS lies not in the tools per se, but in the way they are integrated, deployed and supported organizationally.

This did not happen by chance. It resulted from careful study of the limitations of previous improvement initiatives and from decades of experience with Six Sigma in many organizations, which eventually produced today’s LSS approach.

This is how statistical engineering works; it makes a formal discipline of how to best use the existing statistical toolkit to drive more dramatic results. In other words, statistical engineering integrates existing theory of the tools themselves with the cumulative learnings from applications in diverse settings to develop a dynamic theory of how to generate improved results.

This theory can then be debated by the profession, researched, tested and improved over time. This is what we have seen with Six Sigma; it has gone from Six Sigma to LSS, has incorporated additional methods (such as simulation) and has progressed from using the measure, analyze, improve, control (MAIC) framework to using the define, measure, analyze, improve and control (DMAIC) framework. Statistical engineering enables us to improve how we make improvements, just as we have seen with Six Sigma.

The missing link

Much has been written in quality literature about statistical thinking,2 which includes the critical principles of a process view of work, systems thinking and the importance of understanding and reducing variation. Ideally, these principles should guide the application of statistical tools.

Too often, however, we have found a disconnect between those who understand these principles and those who apply statistical tools. Some can articulate the principles and explain how they apply to specific situations, but they may struggle with applying statistical tools. This leads to lost opportunities.

Conversely, there are many specialists in statistical tools who don’t seem to understand the principles of statistical thinking. But they apply the tools anyway, which often results in situations where, as noted earlier, "the operation was a success, but the patient died."

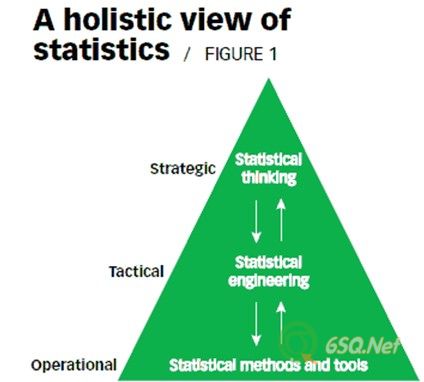

Figure 1 shows how statistical engineering might be the "missing link" that helps integrate statistical thinking and statistical tools. Statistical thinking is the strategic aspect of our discipline; it provides conceptual understanding and the proper context, and it answers the question, "Why should we use statistics?" When this context is properly understood, a well-developed discipline of statistical engineering will provide statistically based improvement approaches grounded in theory and rigorous research.

This provides the tactical aspect of statistics; it answers the question, "What overall approaches should be used?" Individual statistical methods and tools provide the operational aspect and answer the question, "How do we implement these approaches?" The strategic, tactical, operational model of leadership has been around since antiquity and has been applied to the statistics profession at least since 1990.3

Without statistical engineering, by contrast, we have a set of proven principles and a set of tools, and it is rarely obvious to practitioners how to apply the tools in a way that is consistent with the principles. Statistical engineering can provide that connection.

For example, statistical thinking includes the key principle of reducing unwanted variation. Yet much of historical statistical practice has focused tool usage primarily on improving averages.
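To make the contrast between improving averages and reducing variation concrete, here is a minimal Python sketch with made-up data. It assumes two hypothetical processes measured against hypothetical specification limits; the measurement values, the limits and the helper name `summarize` are illustrative assumptions, not taken from the article.

```python
import statistics

# Hypothetical measurements (illustrative only, not data from the article).
process_a = [9.9, 10.0, 10.1, 10.0, 9.9, 10.1, 10.0, 10.0]
process_b = [9.0, 11.0, 10.4, 9.6, 8.8, 11.2, 10.0, 10.0]

# Hypothetical specification limits for the measured characteristic.
LOWER_SPEC = 9.5
UPPER_SPEC = 10.5


def summarize(data):
    """Return (mean, sample standard deviation, count outside the spec limits)."""
    mean = statistics.mean(data)
    sd = statistics.stdev(data)
    out_of_spec = sum(1 for x in data if x < LOWER_SPEC or x > UPPER_SPEC)
    return mean, sd, out_of_spec


if __name__ == "__main__":
    for name, data in (("A", process_a), ("B", process_b)):
        mean, sd, bad = summarize(data)
        # Both processes have the same average; spread and defect counts differ.
        print(f"Process {name}: mean={mean:.2f}, stdev={sd:.3f}, "
              f"out of spec={bad}/{len(data)}")
```

Judged by their averages alone, the two hypothetical processes look identical; only the spread and the out-of-specification count distinguish them, which is why a focus on averages can miss the improvement that reducing variation would deliver.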

Through the rigor of statistical engineering, an overall improvement approach, LSS, was developed that includes the DMAIC roadmap, which shows practitioners how to apply statistical and other tools in such a way as to reduce variation. In other words, the tools are linked to the principles.

Roger W. Hoerl is manager of GE Global Research’s applied statistics lab. He has a doctorate in applied statistics from the University of Delaware in Newark. An ASQ fellow and a recipient of the ASQ Shewhart Medal and Brumbaugh Award, Hoerl is also an academician in the International Academy for Quality.

Ronald D. Snee is president of Snee Associates LLC in Newark, DE. He has a doctorate in applied and mathematical statistics from Rutgers University in New Brunswick, NJ. Snee has received the ASQ Shewhart and Grant medals, is an ASQ fellow and is an academician in the International Academy for Quality.


3 replies

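青稞酒 (Reputation: 0) (Pudong New Area, Shanghai) Electronics manufacturing, Engineer - Intermediate quality engineer, 9 years of quality experience. Skilled in management systems, customer complaints, 现...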


I translated it yesterday too. Is it still needed?